Paper The human voice aligns with whole-body kinetics published

22 May 2025

Our paper The human voice aligns with whole-body kinetics has been published by Royal Society B!

A summary of the content can be found here.

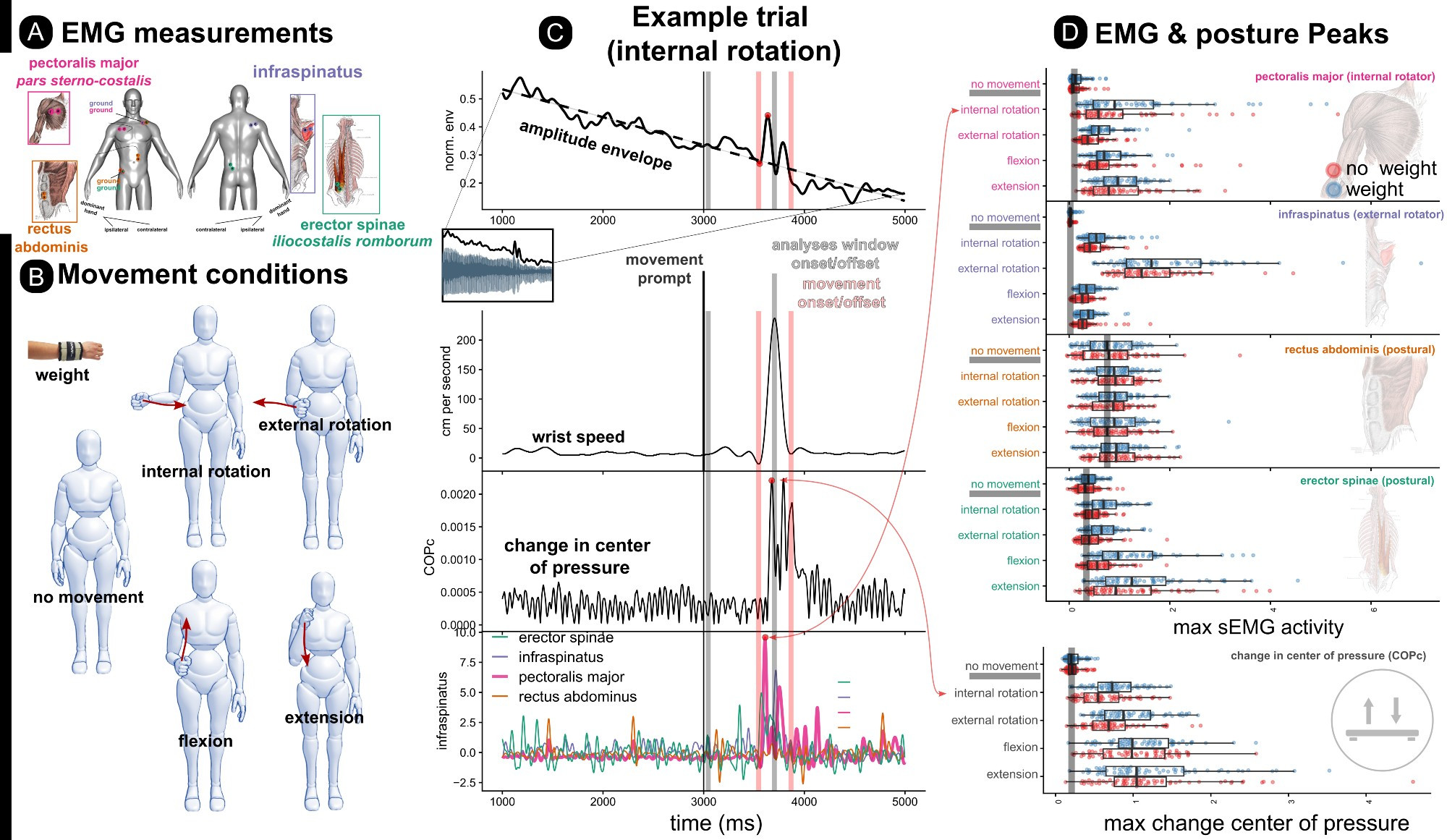

Although I left academia a year ago, I have some publication news! A paper I worked on together with Wim Pouw, Lara S. Burchardt, and Luc Selen has been accepted for publication by Royal Society B. We looked into how the biomechanics of gestures influence the voice. To do this, we used a whole range of multimodal signals:

- audio
- 3D motion tracking via video (for movement)
- EMG (for muscle activation)
- RIP, respiratory inductance plethysmography (for breathing via torso circumference)
- a plate measuring center of pressure (for posture)

We show that moving the arms (while manipulating mass) recruits postural muscles, and that these muscles in turn affect the voice through their interaction with respiration.

While we wait for publication, the postprint is already available, as is the RMarkdown showing the methods and results. Wim also made a great post about it on Bluesky.

In April 2024, I will be starting a new role as a usability engineer for the usability lab at Johner Institut in Frankfurt a. M. I am very excited to get some more industry experience and to learn more about usability and medical devices!

Work I have been involved in has been accepted to two conferences for 2024. In May, Movement-related muscle activity and kinetics affect vocalization amplitude will be presented at EVOLANG 2024 in Madison, USA. In July, we will present Arm movements increase acoustic markers of expiratory flow at Speech Prosody 2024 in Leiden, NL.

Preprints of both are available here.

My dissertation The phonetics of speech breathing: pauses, physiology, acoustics, and perception has been published and is available online as open access.